Learning Python 3 threading module

The threading module that comes with Python is very nice to use. It is a bit scary at the beginning, but becomes a handy tool to attach to your Python toolbelt after a while.

What are threads?

In the past, threads used to be referred to as "lightweight tasks". I like to think of my main program as something that can fire off tasks in the background and collect their results later, when I need them.

When you launch a Python program you end up with a new process that contains one thread called the main thread. If you need to, you can launch additional tasks that will run concurrently.

One very common example is having the main thread of a program wait for remote connections and handle each new client in a separate thread.
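That pattern can be sketched in a few lines. This is only an illustration under some assumptions: the helper names `handle_client` and `fake_client` are made up, the "remote" clients are simulated by local threads so the sketch is self-contained, and the server stops after two connections instead of looping forever.

```python
import queue
import socket
import threading

def handle_client(conn):
    # Runs in its own thread: read one message, answer in upper case, hang up.
    with conn:
        conn.sendall(conn.recv(1024).upper())

def fake_client(port, message, inbox):
    # Stand-in for a remote client so the sketch is self-contained.
    with socket.create_connection(("127.0.0.1", port)) as sock:
        sock.sendall(message)
        inbox.put(sock.recv(1024))

server = socket.create_server(("127.0.0.1", 0))  # port 0: the OS picks a free port
port = server.getsockname()[1]
inbox = queue.Queue()  # thread safe container for the replies

messages = [b"hello", b"world"]
clients = [threading.Thread(target=fake_client, args=(port, m, inbox))
           for m in messages]
for c in clients:
    c.start()

# The main thread only accepts connections; each client gets its own thread.
handlers = []
for _ in messages:
    conn, _addr = server.accept()
    handler = threading.Thread(target=handle_client, args=(conn,))
    handler.start()
    handlers.append(handler)

for t in clients + handlers:
    t.join()
server.close()

replies = sorted(inbox.get() for _ in messages)
print(replies)
```

A real server would keep accepting in a loop and would need to deal with partial reads and errors; the point here is only the split between the accepting thread and the per-client threads.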

Threads are managed by the operating system. Under the hood, most of the time, a program uses the pthreads C library to ask the OS to create new threads. This is how CPython interacts with threads on POSIX systems.

Scary threads

Threads have a key feature that makes them extremely powerful but also hard to use: threads share the same memory space.

That makes threads lightweight, because creating a new thread only means allocating a small amount of memory: just enough to hold the thread stack and the bookkeeping done by the kernel. We are talking about a few tens of kilobytes of RAM per thread.

However, it also means that every thread of a process can access everything that belongs to the others. A thread that holds a socket, for instance, cannot keep it to itself. At any moment another thread can access this socket, modify it, close it, destroy it and so on.

A lot of smart people have worked hard on this problem, and they all tend to agree: threaded code is hard.

Thread safety

Threads are difficult but they have been around for ages and a lot of programs tend to use them anyway. So it must be possible to work with them somehow, right?

The answer is yes: some nice developers made reusable tools and libraries that cooperate well with threads. An API that avoids most of the pitfalls related to threads is said to be thread safe.

In Python, thread safety is often achieved by avoiding shared mutable state, meaning that threads avoid modifying the data they have in common.

Sometimes it's impossible to completely avoid shared mutable state, so instead threads cooperate to make sure that, at any moment, either:

- all of them are reading the data and no one is writing it

- a single thread is writing and no one else is reading

These two points are the key to understanding threads.

As a side note, they are also the leitmotif of the Rust borrow checker. Rust is a language that guarantees at compile time that safe code is free of data races. If you often write threaded code, Rust is probably the best tool you can hang on your belt.

The hard thing about threads in Python is that it is impossible to prove the safety of a program. Unit testing doesn't help and static analysis may raise some warnings, but the only way to tackle the problem is to be careful.

CPython, GIL and atomic operations

The Internet is full of incorrect facts about Python and threads. The worst one is not the famous one saying that Python cannot use threads: at least if you don't use them, you are not using them incorrectly. No, the dangerous one is the one saying that Python provides thread safety by default thanks to the Global Interpreter Lock.

The GIL is an implementation detail of CPython (the most common implementation of the Python language that most people refer to as just "Python"). The GIL is there to protect CPython internals from issues related to thread safety.

Can Python use threads?

Yes absolutely.

CPython uses native OS threads but the GIL makes sure that only one thread executes some Python bytecode at a time. It makes Python a good platform for IO bound applications (sockets, HTTP, files...), less for CPU bound ones.
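A small sketch of the IO bound case, assuming that `time.sleep` is an acceptable stand-in for a blocking network or disk call (the function name `wait_for_io` is made up for the illustration). While a thread is blocked like this it does not hold the GIL, so the waits overlap:

```python
import threading
import time

def wait_for_io():
    # Blocking call standing in for real IO; the GIL is released while waiting.
    time.sleep(0.2)

start = time.perf_counter()

threads = [threading.Thread(target=wait_for_io) for _ in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()

elapsed = time.perf_counter() - start
# Run one after the other, the five waits would take about one second.
print(f"5 waits of 0.2s took {elapsed:.2f}s")
```

Replacing the sleep with a pure Python computation would not show the same speedup on CPython, since only one thread can execute bytecode at a time.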

CPU heavy applications written in pure Python and run on CPython cannot leverage multiple cores with threads. There are ways to work around the GIL for CPU bound programs:

- Write some of the code in C and release the GIL, like NumPy does.

- Use multiple processes instead of multiple threads.

So the GIL makes Python code thread safe?

Not at all.

The GIL does not bring thread safety to the user's Python code, but it makes some basic Python operations atomic. Other implementations of Python, like PyPy, do not necessarily offer the same guarantees.

What is more, even in CPython it is a slippery slope. Some operations on dicts are atomic and some aren't, so it becomes very hard to verify the safety of code that relies on these implementation details.
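One way to get a feel for this is to look at the bytecode of an innocent looking statement. The sketch below disassembles a hypothetical `increment` function; the exact instruction names vary between CPython versions, but the increment is always split into a separate load and store, and nothing in the language guarantees that another thread cannot run between the two.

```python
import dis

counter = 0

def increment():
    global counter
    counter += 1  # looks like one step, compiles to several instructions

# List the instruction names instead of assuming a specific CPython version.
opnames = [ins.opname for ins in dis.get_instructions(increment)]
print(opnames)
```

Whether the interpreter actually switches threads in the middle of such a statement depends on the CPython version, which is exactly why relying on it is fragile.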

Thread safety in practice

Before looking at some code, let's recall how threads can safely access shared data:

- some threads are reading the data and no one is writing it

- a single thread is writing and no one else is reading
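A minimal sketch of these rules, assuming a shared counter that several threads increment (the names `counter`, `counter_lock` and `add_many` are illustrative). The lock makes sure that only one thread at a time reads and writes the variable:

```python
import threading

counter = 0
counter_lock = threading.Lock()

def add_many(n):
    global counter
    for _ in range(n):
        # Only one thread at a time can be inside this block.
        with counter_lock:
            counter += 1

threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# All writers are done, so reading without the lock is safe here.
print(counter)  # 40000
```

Without the lock the final value is not guaranteed, because the increment is a read followed by a write.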

Common patterns with threads

APIs

The previous example shows how to protect a variable from data races. While it works, it couldn't be exposed as part of an API. It would be awkward to tell the users of the API to "remember to always lock the data before accessing it".

A solution for that is to encapsulate the locking logic so that it is not visible to the end user. This technique is used in the standard Python logging module for example.

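    import threading
    from hashlib import sha512

    class DataHasher:

        def __init__(self, data):
            self._data = data
            # Private lock: callers never have to know that it exists.
            self._lock = threading.Lock()

        def hash(self):
            # Replace the data with its own SHA-512 hex digest, under the lock.
            with self._lock:
                self._data = sha512(self._data).hexdigest().encode()

        def get(self):
            with self._lock:
                return self._data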

An instance of this class can be safely shared among threads without requiring the user to care about locking:

    data = DataHasher(b'Hello' * 1000)

    for i in range(10):
        threading.Thread(target=data.hash).start()

The get method returns a reference to a bytes object. As bytes are immutable in Python, users can then do whatever they want with this reference without impacting DataHasher. However, when handing out references to mutable objects, the contract between the API and the user must be clear.

Users can either be told not to mutate the data, or they can be given a defensive copy of the data:

    from copy import deepcopy

    def get(self):
        # Return a copy so that callers cannot mutate the internal state.
        with self._lock:
            return deepcopy(self._data)

Defensive copies are not widely used: they add a performance penalty even when the user has no intention of mutating the data, and some objects cannot be copied safely (file handles, sockets).

Producer - Consumer

The producer consumer pattern is very common in Python. The standard library provides a thread safe queue for this purpose.

    import threading
    import queue
    import time

    q = queue.Queue()

    def append_to_file():
        # Consumer: block until an item is available, then append it to the log.
        while True:
            to_write = q.get()
            with open('/tmp/log', 'a') as f:
                f.write(to_write + '\n')

    def produce_something():
        # Producer: push the current thread's name to the queue once a second.
        for i in range(5):
            q.put(threading.current_thread().name)
            time.sleep(1)

    threading.Thread(target=append_to_file, daemon=True).start()
    threading.Thread(target=produce_something).start()
    threading.Thread(target=produce_something).start()

The code is fairly simple: it creates a queue, two threads that push their names into that queue and a consumer that writes everything that comes out of the queue into a file.

The daemon=True argument passed to the consumer thread allows the program to exit once the other threads have finished their work.

Notice that this code does not explicitly do any locking thanks to the queue encapsulating the heavy work internally.

Poison pills

Daemon threads are abruptly killed when all the non-daemonic threads have exited. As the documentation mentions, it means that they do not clean up their resources before the program exits. In the previous example it means that some messages may not be fully written when the consumer is stopped.

There are two main ways to allow the consumer to finish its job:

- Make the consumer periodically check if an Event happened

- Send a poison pill in the queue. When the consumer finds the pill, it stops itself.

    import threading
    import queue
    import time

    q = queue.Queue()

    # Used purely as a unique marker object: the consumer compares identity
    # and never calls set() or wait() on it.
    must_stop = threading.Event()

    def append_to_file():
        with open('/tmp/log', 'a') as f:
            while True:
                data = q.get()
                if data is must_stop:
                    print('Consumer received the poison pill, stopping')
                    return
                f.write(data + '\n')
                f.flush()

    def produce_something():
        for i in range(5):
            q.put(threading.current_thread().name)
            time.sleep(1)

    # No daemon flag this time: the consumer exits on its own.
    threading.Thread(target=append_to_file).start()

    p1 = threading.Thread(target=produce_something)
    p1.start()
    p1.join()

    # The producer is done, so the pill is the last item in the queue.
    q.put(must_stop)

This snippet uses the poison pill pattern: the must_stop object is put on the queue once the producer has exited, and the consumer stops as soon as it takes it out of the queue.
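The other option, having the consumer periodically check an Event, could look like the sketch below. It is only one possible arrangement and makes a few assumptions: the 0.1 second timeout is arbitrary, the names `consume` and `produce` are made up, and a list called `written` stands in for the log file so that the sketch has no side effects.

```python
import queue
import threading

q = queue.Queue()
must_stop = threading.Event()
written = []  # stands in for the log file

def consume():
    # Keep going until a stop was requested AND the queue has been drained.
    while not (must_stop.is_set() and q.empty()):
        try:
            item = q.get(timeout=0.1)  # wake up regularly to re-check the event
        except queue.Empty:
            continue
        written.append(item)

def produce():
    for i in range(5):
        q.put(f"{threading.current_thread().name}-{i}")

consumer = threading.Thread(target=consume)
consumer.start()

producers = [threading.Thread(target=produce, name=f"producer{n}")
             for n in range(2)]
for p in producers:
    p.start()
for p in producers:
    p.join()

must_stop.set()  # every item is already in the queue at this point
consumer.join()
print(len(written))  # 10
```

Compared to the poison pill, the consumer wakes up needlessly when the queue is idle, but the stop signal does not have to travel through the queue, which can be convenient when several consumers share it.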

Thread Pool

The creation of a thread can be considered a costly operation. When a program has a lot of short lived tasks it can save some CPU instructions by reusing existing threads that are done with their tasks. This is the job of a thread pool.

Instead of manually managing the life cycle of threads, you can rely on the easy to use thread pool implementation provided by the standard library. The concept is simple: a pool of threads that can grow up to N threads is created. Each thread takes a task, processes it, and repeats the operation until all tasks are processed.

The official documentation provides an easy to understand example of distributing work to a thread pool.

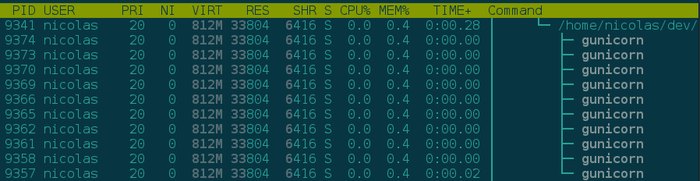
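A shorter sketch in the same spirit, which is not the documentation's example: it uses `concurrent.futures.ThreadPoolExecutor` from the standard library, and a made-up `fetch` function whose sleep stands in for a network call.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fetch(page):
    # Hypothetical IO bound task: the sleep stands in for a network call.
    time.sleep(0.1)
    return f"content of {page}"

pages = ["a", "b", "c", "d", "e", "f"]

# At most three worker threads are created and reused for the six tasks.
with ThreadPoolExecutor(max_workers=3) as pool:
    # map() returns the results in the order of the inputs.
    results = list(pool.map(fetch, pages))

print(results)
```

Leaving the with block waits for all pending tasks, so there is no need to join the worker threads by hand.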

Two very common libraries in the Python ecosystem extend this concept: Celery and Gunicorn can both operate in a mode where a master process pre-launches N threads that are used when a task or a request arrives.

Gunicorn manages ten threads, waiting to handle HTTP requests.

Going further

The Concurrent Execution part of the Python documentation provides a description of all the goodies Python provides out of the box for writing multi-threaded code.

As often in programming, the best way to learn is to read code written by others.

Questions

What is the cost of a thread?

Mostly some memory; the exact amount depends on a lot of factors. It is at least 32 KB per thread, but often much more.

When the OS decides to switch the execution from one thread to another, a bit of time is spent on context switching. In Python this is mostly negligible compared to the rest of the operations.

A decent computer with a recent OS can easily handle ten thousand threads simultaneously.

As discussed previously, threads also have a cost on development. The complexity added by switching from a single threaded program to a multi threaded one shouldn't be overlooked.

Should I go for threads or asynchronous IO in Python?

Given everything that happens currently in the asynchronous Python world it's easy to get lost.

Twisted, Tornado, Gevent, asyncio, curio, and many more... The Python ecosystem is still struggling to find a framework that the community will agree on. Don't get me wrong, all these frameworks are actually usable, and you can build successful businesses with them. They just have some rough edges, and their support in third party libraries ranges from good to abysmal.

On the other hand, third party libraries consider synchronous threaded Python code as their first class citizen. It means that if you decide to forget about asynchronous code you can benefit from very solid bases to start your work.

The same goes for developers. Finding people to work on a synchronous code base is relatively easy: every Python developer is expected to know how to do it. Finding people that have experience in cooperative multitasking can be more difficult.

Aren't threads a thing of the past?

If you look at popular languages right now it's easy to think so. Node.js runs an event loop in a single process without exposing threads. The Go language hides threads away from programmers, giving them an easier to use interface for concurrency.

However, threads are still the go-to solution for many tasks, like accessing file systems. Linux has historically not provided a widely usable asynchronous API for that. Even some projects relying heavily on asynchronous IO sometimes have to use threads: Nginx, for instance, had to implement a thread pool to prevent the event loop from being slowed down by unresponsive disks.

It is also worth mentioning that CPU frequencies have stopped rising; instead we get more and more cores. Cores that can be used by either processes or their lightweight alternative: threads.